11111111

This commit is contained in:

commit

f322af6014

|

|

@ -0,0 +1,201 @@

|

|||

Apache License

|

||||

Version 2.0, January 2004

|

||||

http://www.apache.org/licenses/

|

||||

|

||||

TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

|

||||

|

||||

1. Definitions.

|

||||

|

||||

"License" shall mean the terms and conditions for use, reproduction,

|

||||

and distribution as defined by Sections 1 through 9 of this document.

|

||||

|

||||

"Licensor" shall mean the copyright owner or entity authorized by

|

||||

the copyright owner that is granting the License.

|

||||

|

||||

"Legal Entity" shall mean the union of the acting entity and all

|

||||

other entities that control, are controlled by, or are under common

|

||||

control with that entity. For the purposes of this definition,

|

||||

"control" means (i) the power, direct or indirect, to cause the

|

||||

direction or management of such entity, whether by contract or

|

||||

otherwise, or (ii) ownership of fifty percent (50%) or more of the

|

||||

outstanding shares, or (iii) beneficial ownership of such entity.

|

||||

|

||||

"You" (or "Your") shall mean an individual or Legal Entity

|

||||

exercising permissions granted by this License.

|

||||

|

||||

"Source" form shall mean the preferred form for making modifications,

|

||||

including but not limited to software source code, documentation

|

||||

source, and configuration files.

|

||||

|

||||

"Object" form shall mean any form resulting from mechanical

|

||||

transformation or translation of a Source form, including but

|

||||

not limited to compiled object code, generated documentation,

|

||||

and conversions to other media types.

|

||||

|

||||

"Work" shall mean the work of authorship, whether in Source or

|

||||

Object form, made available under the License, as indicated by a

|

||||

copyright notice that is included in or attached to the work

|

||||

(an example is provided in the Appendix below).

|

||||

|

||||

"Derivative Works" shall mean any work, whether in Source or Object

|

||||

form, that is based on (or derived from) the Work and for which the

|

||||

editorial revisions, annotations, elaborations, or other modifications

|

||||

represent, as a whole, an original work of authorship. For the purposes

|

||||

of this License, Derivative Works shall not include works that remain

|

||||

separable from, or merely link (or bind by name) to the interfaces of,

|

||||

the Work and Derivative Works thereof.

|

||||

|

||||

"Contribution" shall mean any work of authorship, including

|

||||

the original version of the Work and any modifications or additions

|

||||

to that Work or Derivative Works thereof, that is intentionally

|

||||

submitted to Licensor for inclusion in the Work by the copyright owner

|

||||

or by an individual or Legal Entity authorized to submit on behalf of

|

||||

the copyright owner. For the purposes of this definition, "submitted"

|

||||

means any form of electronic, verbal, or written communication sent

|

||||

to the Licensor or its representatives, including but not limited to

|

||||

communication on electronic mailing lists, source code control systems,

|

||||

and issue tracking systems that are managed by, or on behalf of, the

|

||||

Licensor for the purpose of discussing and improving the Work, but

|

||||

excluding communication that is conspicuously marked or otherwise

|

||||

designated in writing by the copyright owner as "Not a Contribution."

|

||||

|

||||

"Contributor" shall mean Licensor and any individual or Legal Entity

|

||||

on behalf of whom a Contribution has been received by Licensor and

|

||||

subsequently incorporated within the Work.

|

||||

|

||||

2. Grant of Copyright License. Subject to the terms and conditions of

|

||||

this License, each Contributor hereby grants to You a perpetual,

|

||||

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

||||

copyright license to reproduce, prepare Derivative Works of,

|

||||

publicly display, publicly perform, sublicense, and distribute the

|

||||

Work and such Derivative Works in Source or Object form.

|

||||

|

||||

3. Grant of Patent License. Subject to the terms and conditions of

|

||||

this License, each Contributor hereby grants to You a perpetual,

|

||||

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

||||

(except as stated in this section) patent license to make, have made,

|

||||

use, offer to sell, sell, import, and otherwise transfer the Work,

|

||||

where such license applies only to those patent claims licensable

|

||||

by such Contributor that are necessarily infringed by their

|

||||

Contribution(s) alone or by combination of their Contribution(s)

|

||||

with the Work to which such Contribution(s) was submitted. If You

|

||||

institute patent litigation against any entity (including a

|

||||

cross-claim or counterclaim in a lawsuit) alleging that the Work

|

||||

or a Contribution incorporated within the Work constitutes direct

|

||||

or contributory patent infringement, then any patent licenses

|

||||

granted to You under this License for that Work shall terminate

|

||||

as of the date such litigation is filed.

|

||||

|

||||

4. Redistribution. You may reproduce and distribute copies of the

|

||||

Work or Derivative Works thereof in any medium, with or without

|

||||

modifications, and in Source or Object form, provided that You

|

||||

meet the following conditions:

|

||||

|

||||

(a) You must give any other recipients of the Work or

|

||||

Derivative Works a copy of this License; and

|

||||

|

||||

(b) You must cause any modified files to carry prominent notices

|

||||

stating that You changed the files; and

|

||||

|

||||

(c) You must retain, in the Source form of any Derivative Works

|

||||

that You distribute, all copyright, patent, trademark, and

|

||||

attribution notices from the Source form of the Work,

|

||||

excluding those notices that do not pertain to any part of

|

||||

the Derivative Works; and

|

||||

|

||||

(d) If the Work includes a "NOTICE" text file as part of its

|

||||

distribution, then any Derivative Works that You distribute must

|

||||

include a readable copy of the attribution notices contained

|

||||

within such NOTICE file, excluding those notices that do not

|

||||

pertain to any part of the Derivative Works, in at least one

|

||||

of the following places: within a NOTICE text file distributed

|

||||

as part of the Derivative Works; within the Source form or

|

||||

documentation, if provided along with the Derivative Works; or,

|

||||

within a display generated by the Derivative Works, if and

|

||||

wherever such third-party notices normally appear. The contents

|

||||

of the NOTICE file are for informational purposes only and

|

||||

do not modify the License. You may add Your own attribution

|

||||

notices within Derivative Works that You distribute, alongside

|

||||

or as an addendum to the NOTICE text from the Work, provided

|

||||

that such additional attribution notices cannot be construed

|

||||

as modifying the License.

|

||||

|

||||

You may add Your own copyright statement to Your modifications and

|

||||

may provide additional or different license terms and conditions

|

||||

for use, reproduction, or distribution of Your modifications, or

|

||||

for any such Derivative Works as a whole, provided Your use,

|

||||

reproduction, and distribution of the Work otherwise complies with

|

||||

the conditions stated in this License.

|

||||

|

||||

5. Submission of Contributions. Unless You explicitly state otherwise,

|

||||

any Contribution intentionally submitted for inclusion in the Work

|

||||

by You to the Licensor shall be under the terms and conditions of

|

||||

this License, without any additional terms or conditions.

|

||||

Notwithstanding the above, nothing herein shall supersede or modify

|

||||

the terms of any separate license agreement you may have executed

|

||||

with Licensor regarding such Contributions.

|

||||

|

||||

6. Trademarks. This License does not grant permission to use the trade

|

||||

names, trademarks, service marks, or product names of the Licensor,

|

||||

except as required for reasonable and customary use in describing the

|

||||

origin of the Work and reproducing the content of the NOTICE file.

|

||||

|

||||

7. Disclaimer of Warranty. Unless required by applicable law or

|

||||

agreed to in writing, Licensor provides the Work (and each

|

||||

Contributor provides its Contributions) on an "AS IS" BASIS,

|

||||

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

|

||||

implied, including, without limitation, any warranties or conditions

|

||||

of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

|

||||

PARTICULAR PURPOSE. You are solely responsible for determining the

|

||||

appropriateness of using or redistributing the Work and assume any

|

||||

risks associated with Your exercise of permissions under this License.

|

||||

|

||||

8. Limitation of Liability. In no event and under no legal theory,

|

||||

whether in tort (including negligence), contract, or otherwise,

|

||||

unless required by applicable law (such as deliberate and grossly

|

||||

negligent acts) or agreed to in writing, shall any Contributor be

|

||||

liable to You for damages, including any direct, indirect, special,

|

||||

incidental, or consequential damages of any character arising as a

|

||||

result of this License or out of the use or inability to use the

|

||||

Work (including but not limited to damages for loss of goodwill,

|

||||

work stoppage, computer failure or malfunction, or any and all

|

||||

other commercial damages or losses), even if such Contributor

|

||||

has been advised of the possibility of such damages.

|

||||

|

||||

9. Accepting Warranty or Additional Liability. While redistributing

|

||||

the Work or Derivative Works thereof, You may choose to offer,

|

||||

and charge a fee for, acceptance of support, warranty, indemnity,

|

||||

or other liability obligations and/or rights consistent with this

|

||||

License. However, in accepting such obligations, You may act only

|

||||

on Your own behalf and on Your sole responsibility, not on behalf

|

||||

of any other Contributor, and only if You agree to indemnify,

|

||||

defend, and hold each Contributor harmless for any liability

|

||||

incurred by, or claims asserted against, such Contributor by reason

|

||||

of your accepting any such warranty or additional liability.

|

||||

|

||||

END OF TERMS AND CONDITIONS

|

||||

|

||||

APPENDIX: How to apply the Apache License to your work.

|

||||

|

||||

To apply the Apache License to your work, attach the following

|

||||

boilerplate notice, with the fields enclosed by brackets "[]"

|

||||

replaced with your own identifying information. (Don't include

|

||||

the brackets!) The text should be enclosed in the appropriate

|

||||

comment syntax for the file format. We also recommend that a

|

||||

file or class name and description of purpose be included on the

|

||||

same "printed page" as the copyright notice for easier

|

||||

identification within third-party archives.

|

||||

|

||||

Copyright [yyyy] [name of copyright owner]

|

||||

|

||||

Licensed under the Apache License, Version 2.0 (the "License");

|

||||

you may not use this file except in compliance with the License.

|

||||

You may obtain a copy of the License at

|

||||

|

||||

http://www.apache.org/licenses/LICENSE-2.0

|

||||

|

||||

Unless required by applicable law or agreed to in writing, software

|

||||

distributed under the License is distributed on an "AS IS" BASIS,

|

||||

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

||||

See the License for the specific language governing permissions and

|

||||

limitations under the License.

|

||||

|

|

@ -0,0 +1,691 @@

|

|||

<div align="center">

|

||||

<img src='docs/img/flgo_icon.png' width="200"/>

|

||||

<h1>FLGo: A Lightning Framework for Federated Learning</h1>

|

||||

|

||||

<!-- [](https://pypi.org/project/flgo/)

|

||||

[](https://pypi.org/project/flgo/) -->

|

||||

[](https://pypi.org/project/flgo/)

|

||||

[](https://flgo-xmu.github.io/)

|

||||

[](https://github.com/WwZzz/easyFL/blob/FLGo/LICENSE)

|

||||

|

||||

|

||||

</div>

|

||||

|

||||

|

||||

<!-- ## Major Feature -->

|

||||

|

||||

[//]: # (## Table of Contents)

|

||||

|

||||

[//]: # (- [Introduction](#Introduction))

|

||||

|

||||

[//]: # (- [QuickStart](#Quick Start with 3 lines))

|

||||

|

||||

[//]: # (- [Architecture](#Architecture))

|

||||

|

||||

[//]: # (- [Citation](#Citation))

|

||||

|

||||

[//]: # (- [Contacts](#Contacts))

|

||||

|

||||

[//]: # (- [References](#References))

|

||||

|

||||

[//]: # (- )

|

||||

# Introduction

|

||||

FLGo is a library to conduct experiments about Federated Learning (FL). It is strong and reusable for research on FL, providing comprehensive easy-to-use modules to hold out for those who want to do various federated learning experiments.

|

||||

|

||||

## Installation

|

||||

* Install FLGo through pip. It's recommended to install pytorch by yourself before installing this library.

|

||||

```sh

|

||||

pip install flgo --upgrade

|

||||

```

|

||||

* Install FLGo through git

|

||||

```sh

|

||||

git clone https://github.com/WwZzz/easyFL.git

|

||||

```

|

||||

## Join Us :smiley:

|

||||

Welcome to our FLGo's WeChat group/QQ Group for more technical discussion.

|

||||

|

||||

<center>

|

||||

<!-- <img src="https://github.com/user-attachments/assets/230247bc-8fce-4821-901b-d0e22ca360fd" width=180/> -->

|

||||

|

||||

<img src="https://github.com/user-attachments/assets/39070ba7-4752-46ec-b8b4-3d5591992595" width=180/>

|

||||

</center>

|

||||

|

||||

Group Number: 838298386

|

||||

|

||||

Tutorials in Chinese can be found [here](https://www.zhihu.com/column/c_1618319253936984064)

|

||||

# News

|

||||

**[2024.9.20]** We present a comprehensive benchmark gallery [here](https://github.com/WwZzz/FLGo-Bench)

|

||||

|

||||

**[2024.8.01]** Improving efficiency by sharing datasets across multiple processes within each task in the shared memory

|

||||

|

||||

# Quick Start with 3 lines :zap:

|

||||

```python

|

||||

import flgo

|

||||

import flgo.benchmark.mnist_classification as mnist

|

||||

import flgo.benchmark.partition as fbp

|

||||

import flgo.algorithm.fedavg as fedavg

|

||||

|

||||

# Line 1: Create a typical federated learning task

|

||||

flgo.gen_task_by_(mnist, fbp.IIDPartitioner(num_clients=100), './my_task')

|

||||

|

||||

# Line 2: Running FedAvg on this task

|

||||

fedavg_runner = flgo.init('./my_task', fedavg, {'gpu': [0,], 'num_rounds':20, 'num_epochs': 1})

|

||||

|

||||

# Line 3: Start Training

|

||||

fedavg_runner.run()

|

||||

```

|

||||

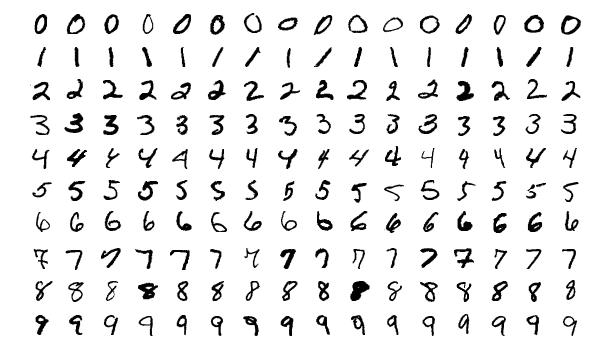

We take a classical federated dataset, Federated MNIST, as the example. The MNIST dataset is splitted into 100 parts identically and independently.

|

||||

|

||||

Line 1 creates the federated dataset as `./my_task` and visualizes it in `./my_task/res.png`

|

||||

|

||||

|

||||

Lines 2 and 3 start the training procedure and outputs information to the console

|

||||

```

|

||||

2024-04-15 02:30:43,763 fflow.py init [line:642] INFO PROCESS ID: 552206

|

||||

2024-04-15 02:30:43,763 fflow.py init [line:643] INFO Initializing devices: cuda:0 will be used for this running.

|

||||

2024-04-15 02:30:43,763 fflow.py init [line:646] INFO BENCHMARK: flgo.benchmark.mnist_classification

|

||||

2024-04-15 02:30:43,763 fflow.py init [line:647] INFO TASK: ./my_task

|

||||

2024-04-15 02:30:43,763 fflow.py init [line:648] INFO MODEL: flgo.benchmark.mnist_classification.model.cnn

|

||||

2024-04-15 02:30:43,763 fflow.py init [line:649] INFO ALGORITHM: fedavg

|

||||

2024-04-15 02:30:43,774 fflow.py init [line:688] INFO SCENE: horizontal FL with 1 <class 'flgo.algorithm.fedbase.BasicServer'>, 100 <class 'flgo.algorithm.fedbase.BasicClient'>

|

||||

2024-04-15 02:30:47,851 fflow.py init [line:705] INFO SIMULATOR: <class 'flgo.simulator.default_simulator.Simulator'>

|

||||

2024-04-15 02:30:47,853 fflow.py init [line:718] INFO Ready to start.

|

||||

...

|

||||

2024-04-15 02:30:52,466 fedbase.py run [line:253] INFO --------------Round 1--------------

|

||||

2024-04-15 02:30:52,466 simple_logger.py log_once [line:14] INFO Current_time:1

|

||||

2024-04-15 02:30:54,402 simple_logger.py log_once [line:28] INFO test_accuracy 0.6534

|

||||

2024-04-15 02:30:54,402 simple_logger.py log_once [line:28] INFO test_loss 1.5835

|

||||

...

|

||||

```

|

||||

* **Show Training Result (optional)**

|

||||

```python

|

||||

import flgo.experiment.analyzer as fea

|

||||

# Create the analysis plan

|

||||

analysis_plan = {

|

||||

'Selector':{'task': './my_task', 'header':['fedavg',], },

|

||||

'Painter':{'Curve':[{'args':{'x':'communication_round', 'y':'val_loss'}}]},

|

||||

}

|

||||

|

||||

fea.show(analysis_plan)

|

||||

```

|

||||

Each training result will be saved as a record under `./my_task/record`. We can use the built-in analyzer to read and show it.

|

||||

|

||||

|

||||

|

||||

# Why Using FLGo? :hammer_and_wrench:

|

||||

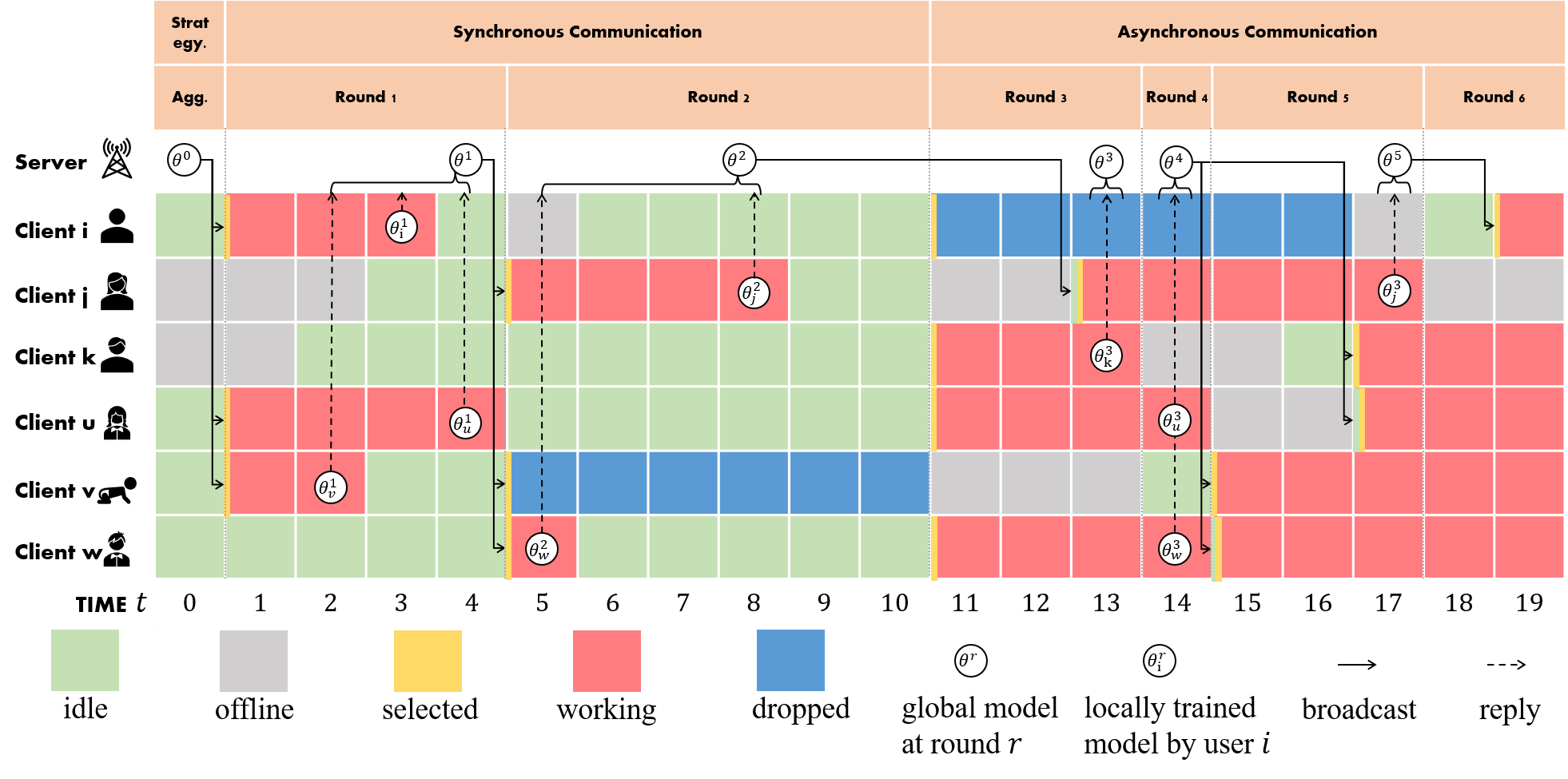

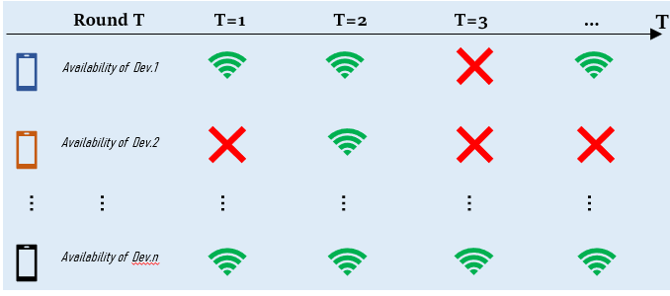

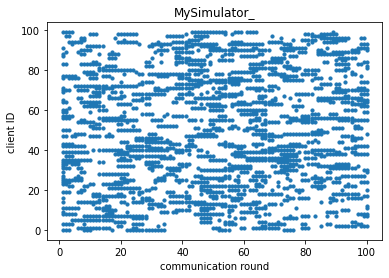

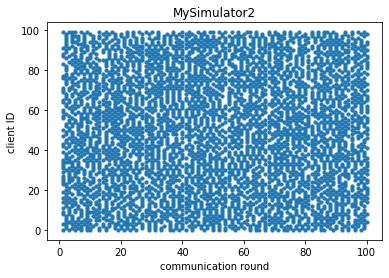

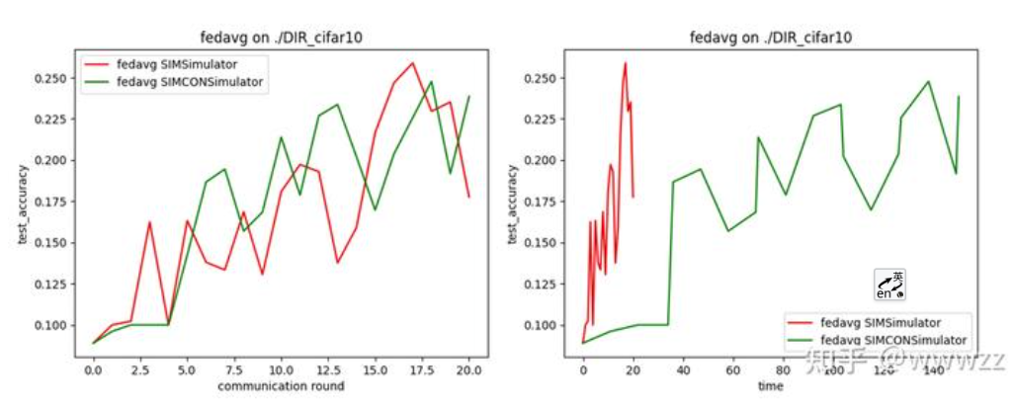

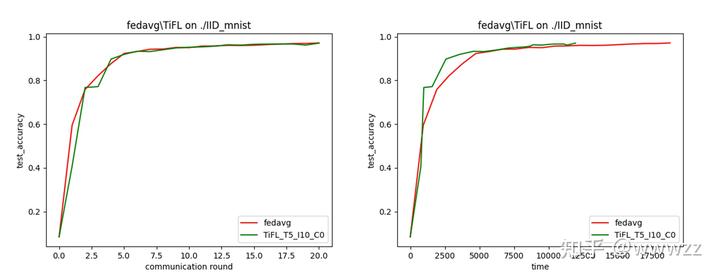

## Simulate Real-World System Heterogeneity :iphone:

|

||||

|

||||

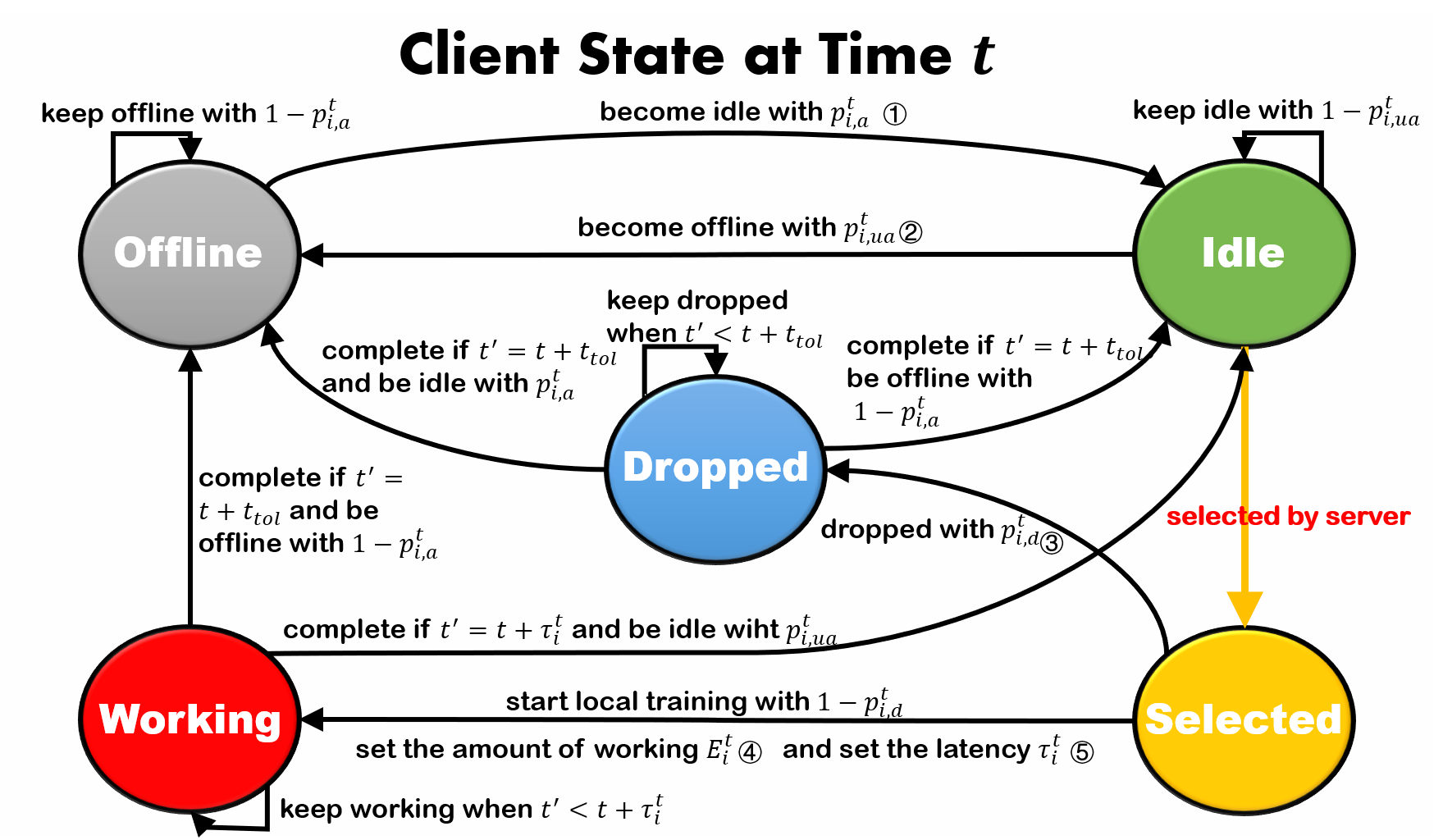

Our FLGo supports running different algorithms in virtual environments like real-world. For example, clients in practice may

|

||||

* *be sometime inavailable*,

|

||||

* *response to the server very slow*,

|

||||

* *accidiently lose connection*,

|

||||

* *upload incomplete model updates*,

|

||||

* ...

|

||||

|

||||

All of these behavior can be easily realized by integrating a simple `Simulator` to the runner like

|

||||

```python

|

||||

import flgo

|

||||

from flgo.simulator import ExampleSimulator

|

||||

import flgo.algorithm.fedavg as fedavg

|

||||

|

||||

fedavg_runner = flgo.init('./my_task', fedavg, {'gpu': [0,]}, simulator=ExampleSimulator)

|

||||

fedavg_runner.run()

|

||||

```

|

||||

|

||||

`Simulator` is fully customizable and can fairly reflect the impact of system heterogeneity on different algorithms. Please refer to [Paper](https://arxiv.org/abs/2306.12079) or [Tutorial](https://flgo-xmu.github.io/Tutorials/4_Simulator_Customization/) for more details.

|

||||

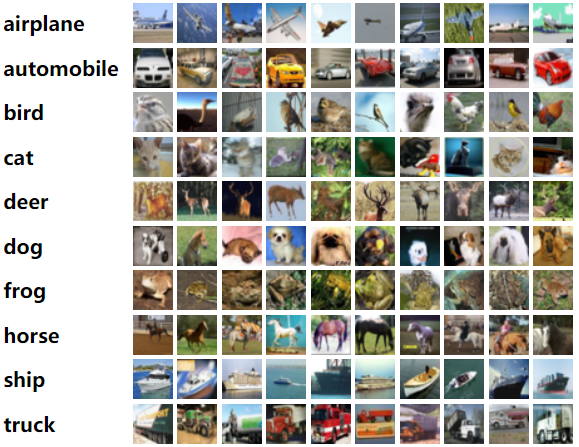

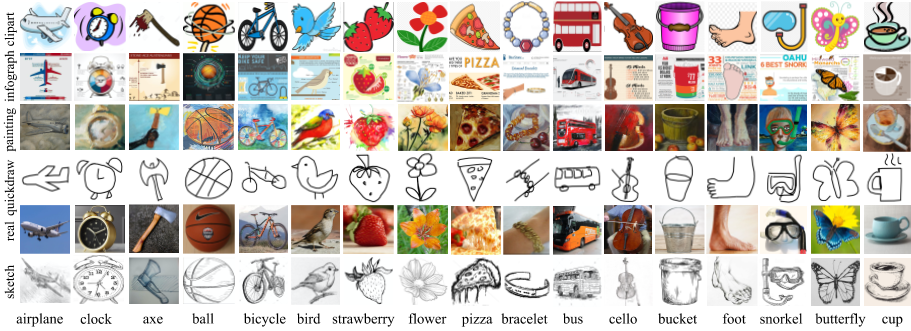

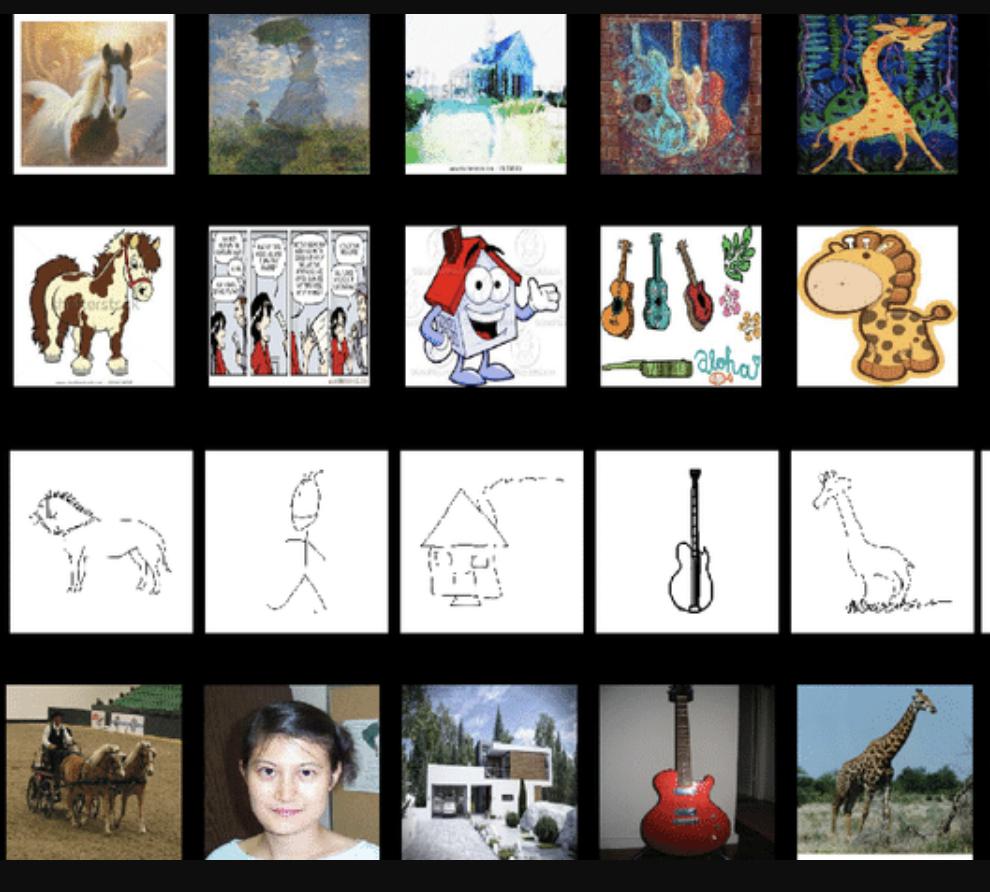

## Comprehensive Benchmarks :family_woman_woman_boy_boy:

|

||||

FLGo provides more than 50 benchmarks across different data types, different communication topology,...

|

||||

<table>

|

||||

<tr>

|

||||

<td>

|

||||

<td>Task

|

||||

<td>Scenario

|

||||

<td>Datasets

|

||||

<td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td rowspan=3>CV

|

||||

<td>Classification

|

||||

<td>Horizontal & Vertical

|

||||

<td>CIFAR10\100, MNIST, FashionMNIST,FEMNIST, EMNIST, SVHN

|

||||

<td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td>Detection

|

||||

<td>Horizontal

|

||||

<td>Coco, VOC

|

||||

<td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td>Segmentation

|

||||

<td>Horizontal

|

||||

<td>Coco, SBDataset

|

||||

<td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td rowspan=3>NLP

|

||||

<td>Classification

|

||||

<td>Horizontal

|

||||

<td>Sentiment140, AG_NEWS, sst2

|

||||

<td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td>Text Prediction

|

||||

<td>Horizontal

|

||||

<td>Shakespeare, Reddit

|

||||

<td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td>Translation

|

||||

<td>Horizontal

|

||||

<td>Multi30k

|

||||

<td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td rowspan=3>Graph

|

||||

<td>Node Classification

|

||||

<td>Horizontal

|

||||

<td>Cora, Citeseer, Pubmed

|

||||

<td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td>Link Prediction

|

||||

<td>Horizontal

|

||||

<td>Cora, Citeseer, Pubmed

|

||||

<td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td>Graph Classification

|

||||

<td>Horizontal

|

||||

<td>Enzymes, Mutag

|

||||

<td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td>Recommendation

|

||||

<td>Rating Prediction

|

||||

<td>Horizontal & Vertical

|

||||

<td>Ciao, Movielens, Epinions, Filmtrust, Douban

|

||||

<td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td>Series

|

||||

<td>Time series forecasting

|

||||

<td>Horizontal

|

||||

<td>Electricity, Exchange Rate

|

||||

<td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td>Tabular

|

||||

<td>Classification

|

||||

<td>Horizontal

|

||||

<td>Adult, Bank Marketing

|

||||

<td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td>Synthetic

|

||||

<td>Regression

|

||||

<td>Horizontal

|

||||

<td>Synthetic, DistributedQP, CUBE

|

||||

<td>

|

||||

</tr>

|

||||

</table>

|

||||

|

||||

### Usage

|

||||

Each benchmark can be used to generate federated tasks that denote distributed scenes with specific data distributions like

|

||||

|

||||

```python

|

||||

import flgo

|

||||

import flgo.benchmark.cifar10_classification as cifar10

|

||||

import flgo.benchmark.partition as fbp

|

||||

import flgo.algorithm.fedavg as fedavg

|

||||

|

||||

task = './my_first_cifar' # task name

|

||||

flgo.gen_task_by_(cifar10, fbp.IIDPartitioner(num_clients=10), task) # generate task from benchmark with partitioner

|

||||

flgo.init(task, fedavg, {'gpu':0}).run()

|

||||

```

|

||||

|

||||

|

||||

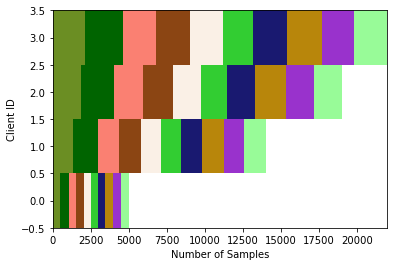

## Visualized Data Heterogeneity :eyes:

|

||||

We realize data heterogeneity by flexible partitioners. These partitioners can be easily combined with `benchmark` to generate federated tasks with different data distributions.

|

||||

```python

|

||||

import flgo.benchmark.cifar10_classification as cifar10

|

||||

import flgo.benchmark.partition as fbp

|

||||

```

|

||||

#### Dirichlet(α) of labels

|

||||

```python

|

||||

flgo.gen_task_by_(cifar10, fbp.DirichletPartitioner(num_clients=100, alpha=0.1), 'dir0.1_cifar')

|

||||

flgo.gen_task_by_(cifar10, fbp.DirichletPartitioner(num_clients=100, alpha=1.0), 'dir1.0_cifar')

|

||||

flgo.gen_task_by_(cifar10, fbp.DirichletPartitioner(num_clients=100, alpha=5.0), 'dir5.0_cifar')

|

||||

flgo.gen_task_by_(cifar10, fbp.DirichletPartitioner(num_clients=100, alpha=10.0), 'dir10.0_cifar')

|

||||

```

|

||||

|

||||

#### Controllable Data Imbalance

|

||||

```python

|

||||

# set imbalance=0.1, 0.3, 0.6 or 1.0

|

||||

flgo.gen_task_by_(cifar10, fbp.DirichletPartitioner(num_clients=100, alpha=1.0, imbalance=0.1), 'dir1.0_cifar_imb0.1')

|

||||

flgo.gen_task_by_(cifar10, fbp.DirichletPartitioner(num_clients=100, alpha=1.0, imbalance=0.3), 'dir1.0_cifar_imb0.3')

|

||||

flgo.gen_task_by_(cifar10, fbp.DirichletPartitioner(num_clients=100, alpha=1.0, imbalance=0.6), 'dir1.0_cifar_imb0.6')

|

||||

flgo.gen_task_by_(cifar10, fbp.DirichletPartitioner(num_clients=100, alpha=1.0, imbalance=1.0), 'dir1.0_cifar_imb1.0')

|

||||

```

|

||||

|

||||

#### Heterogeneous Label Diversity

|

||||

```python

|

||||

flgo.gen_task_by_(cifar10, fbp.DiversityPartitioner(num_clients=100, diversity=0.1), 'div0.1_cifar')

|

||||

flgo.gen_task_by_(cifar10, fbp.DiversityPartitioner(num_clients=100, diversity=0.3), 'div0.3_cifar')

|

||||

flgo.gen_task_by_(cifar10, fbp.DiversityPartitioner(num_clients=100, diversity=0.6), 'div0.6_cifar')

|

||||

flgo.gen_task_by_(cifar10, fbp.DiversityPartitioner(num_clients=100, diversity=1.0), 'div1.0_cifar')

|

||||

```

|

||||

|

||||

|

||||

`Partitioner` is also customizable in flgo. We have provided a detailed example in this [Tutorial](https://flgo-xmu.github.io/Tutorials/3_Benchmark_Customization/3.7_Data_Heterogeneity/).

|

||||

|

||||

## Reproduction of Algorithms from TOP-tiers and Journals :1st_place_medal:

|

||||

We have realized more than 50 algorithms from TOP-tiers and Journals. The algorithms are listed as below

|

||||

|

||||

#### Classical FL & Data Heterogeneity

|

||||

|

||||

| Method | Reference | Publication |

|

||||

|----------|-----------|-------------|

|

||||

| FedAvg | [link](http://arxiv.org/abs/1602.05629) | AISTAS2017 |

|

||||

| FedProx | [link](http://arxiv.org/abs/1812.06127) | MLSys 2020 |

|

||||

| Scaffold | [link](http://arxiv.org/abs/1910.06378) | ICML 2020 |

|

||||

| FedDyn | [link](http://arxiv.org/abs/2111.04263) | ICLR 2021 |

|

||||

| MOON | [link](http://arxiv.org/abs/2103.16257) | CVPR 2021 |

|

||||

| FedNova | [link](http://arxiv.org/abs/2007.07481) | NIPS 2021 |

|

||||

| FedAvgM | [link](https://arxiv.org/abs/1909.06335) | arxiv |

|

||||

| GradMA |[link](http://arxiv.org/abs/2302.14307) | CVPR 2023 |

|

||||

|

||||

|

||||

#### Personalized FL

|

||||

| Method | Reference | Publication |

|

||||

|-----------------|-----------------------------------------------------------------|--------------------|

|

||||

| Standalone | [link](http://arxiv.org/abs/1602.05629) | - |

|

||||

| FedAvg+FineTune | - | - |

|

||||

| Ditto | [link](http://arxiv.org/abs/2007.14390) | ICML 2021 |

|

||||

| FedALA | [link](http://arxiv.org/abs/2212.01197) | AAAI 2023 |

|

||||

| FedRep | [link](http://arxiv.org/abs/2102.07078) | ICML 2021 |

|

||||

| pFedMe | [link](http://arxiv.org/abs/2006.08848) | NIPS 2020 |

|

||||

| Per-FedAvg | [link](http://arxiv.org/abs/2002.07948) | NIPS 2020 |

|

||||

| FedAMP | [link](http://arxiv.org/abs/2007.03797) | AAAI 2021 |

|

||||

| FedFomo | [link](http://arxiv.org/abs/2012.08565) | ICLR 2021 |

|

||||

| LG-FedAvg | [link](http://arxiv.org/abs/2001.01523) | NIPS 2019 workshop |

|

||||

| pFedHN | [link](https://proceedings.mlr.press/v139/shamsian21a.html) | ICML 2021 |

|

||||

| Fed-ROD | [link](https://openreview.net/forum?id=I1hQbx10Kxn) | ICLR 2023 |

|

||||

| FedPAC | [link](http://arxiv.org/abs/2306.11867) | ICLR 2023 |

|

||||

| FedPer | [link](http://arxiv.org/abs/1912.00818) | AISTATS 2020 |

|

||||

| APPLE | [link](https://www.ijcai.org/proceedings/2022/301) | IJCAI 2022 |

|

||||

| FedBABU | [link](http://arxiv.org/abs/2106.06042) | ICLR 2022 |

|

||||

| FedBN | [link](https://openreview.net/pdf?id=6YEQUn0QICG) | ICLR 2021 |

|

||||

| FedPHP | [link](https://dl.acm.org/doi/abs/10.1007/978-3-030-86486-6_36) | ECML/PKDD 2021 |

|

||||

| APFL | [link](http://arxiv.org/abs/2003.13461) | arxiv |

|

||||

| FedProto | [link](https://ojs.aaai.org/index.php/AAAI/article/view/20819) | AAAI 2022 |

|

||||

| FedCP | [link](http://arxiv.org/abs/2307.01217) | KDD 2023 |

|

||||

| GPFL | [link](http://arxiv.org/abs/2308.10279) | ICCV 2023 |

|

||||

| pFedPara | [link](http://arxiv.org/abs/2108.06098) | ICLR 2022 |

|

||||

| FedFA | [link](https://arxiv.org/abs/2301.12995) | ICLR 2023 |

|

||||

|

||||

#### Fairness-Aware FL

|

||||

| Method |Reference| Publication |

|

||||

|----------|---|---------------------------|

|

||||

| AFL |[link](http://arxiv.org/abs/1902.00146) | ICML 2019 |

|

||||

| FedFv |[link](http://arxiv.org/abs/2104.14937) | IJCAI 2021 |

|

||||

| FedFa |[link](http://arxiv.org/abs/2012.10069) | Information Sciences 2022 |

|

||||

| FedMgda+ |[link](http://arxiv.org/abs/2006.11489) | IEEE TNSE 2022 |

|

||||

| QFedAvg |[link](http://arxiv.org/abs/1905.10497) | ICLR 2020 |

|

||||

|

||||

#### Asynchronous FL

|

||||

| Method |Reference| Publication |

|

||||

|----------|---|--------------|

|

||||

| FedAsync |[link](http://arxiv.org/abs/1903.03934) | arxiv |

|

||||

| FedBuff |[link](http://arxiv.org/abs/2106.06639) | AISTATS 2022 |

|

||||

| CA2FL |[link](https://openreview.net/forum?id=4aywmeb97I) | ICLR2024 |

|

||||

#### Client Sampling & Heterogeneous Availability

|

||||

| Method |Reference| Publication |

|

||||

|-------------------|---|--------------|

|

||||

| MIFA |[link](http://arxiv.org/abs/2106.04159) | NeurIPS 2021 |

|

||||

| PowerofChoice |[link](http://arxiv.org/abs/2010.13723) | arxiv |

|

||||

| FedGS |[link](https://arxiv.org/abs/2211.13975) | AAAI 2023 |

|

||||

| ClusteredSampling |[link](http://arxiv.org/abs/2105.05883) | ICML 2021 |

|

||||

|

||||

|

||||

#### Capacity Heterogeneity

|

||||

| Method | Reference | Publication |

|

||||

|------------------|------------------------------------------------------------------------------------------------------------------------------|--------------|

|

||||

| FederatedDropout | [link](http://arxiv.org/abs/1812.07210) | arxiv |

|

||||

| FedRolex | [link](https://openreview.net/forum?id=OtxyysUdBE) | NIPS 2022 |

|

||||

| Fjord | [link](https://proceedings.neurips.cc/paper/2021/hash/6aed000af86a084f9cb0264161e29dd3-Abstract.html) | NIPS 2021 |

|

||||

| FLANC | [link](https://proceedings.neurips.cc/paper_files/paper/2022/hash/1b61ad02f2da8450e08bb015638a9007-Abstract-Conference.html) | NIPS 2022 |

|

||||

| Hermes | [link](https://dl.acm.org/doi/10.1145/3447993.3483278) | MobiCom 2021 |

|

||||

| FedMask | [link](https://dl.acm.org/doi/10.1145/3485730.3485929) | SenSys 2021 |

|

||||

| LotteryFL | [link](http://arxiv.org/abs/2008.03371) | arxiv |

|

||||

| HeteroFL | [link](http://arxiv.org/abs/2010.01264) | ICLR 2021 |

|

||||

| TailorFL | [link](https://dl.acm.org/doi/10.1145/3560905.3568503) | SenSys 2022 |

|

||||

| pFedGate | [link](http://arxiv.org/abs/2305.02776) | ICML 2023 |

|

||||

|

||||

## Combine All The Things Together! :bricks:

|

||||

<img src="https://github.com/WwZzz/myfigs/blob/master/readme_flgo_com.png?raw=true" width=500/>

|

||||

|

||||

FLGo supports flexible combinations of benchmarks, partitioners, algorithms and simulators , which are independent to each other and thus can be used like plugins. We have provided these plugins [here](https://github.com/WwZzz/easyFL/tree/FLGo/resources) , where each can be immediately downloaded and used by API

|

||||

|

||||

```python

|

||||

import flgo

|

||||

import flgo.benchmark.partition as fbp

|

||||

|

||||

fedavg = flgo.download_resource(root='.', name='fedavg', type='algorithm')

|

||||

mnist = flgo.download_resource(root='.', name='mnist_classification', type='benchmark')

|

||||

task = 'test_down_mnist'

|

||||

flgo.gen_task_by_(mnist,fbp.IIDPartitioner(num_clients=10,), task_path=task)

|

||||

flgo.init(task, fedavg, {'gpu':0}).run()

|

||||

```

|

||||

|

||||

[//]: # (## Multiple Communication Topology Support)

|

||||

## Easy-to-use Experimental Tools :toolbox:

|

||||

### Load Results

|

||||

Each runned result will be automatically saved in `task_path/record/`. We provide an API to easily load and filter records.

|

||||

```python

|

||||

import flgo

|

||||

import flgo.experiment.analyzer as fea

|

||||

import matplotlib.pyplot as plt

|

||||

res = fea.Selector({'task': './my_task', 'header':['fedavg',], },)

|

||||

log_data = res.records['./my_task'][0].data

|

||||

val_loss = log_data['val_loss']

|

||||

plt.plot(list(range(len(val_loss))), val_loss)

|

||||

plt.show()

|

||||

```

|

||||

|

||||

### Use Checkpoint

|

||||

```python

|

||||

import flgo.algorithm.fedavg as fedavg

|

||||

import flgo.experiment.analyzer

|

||||

|

||||

task = './my_task'

|

||||

ckpt = '1'

|

||||

runner = flgo.init(task, fedavg, {'gpu':[0,],'log_file':True, 'num_epochs':1, 'save_checkpoint':ckpt, 'load_checkpoint':ckpt})

|

||||

runner.run()

|

||||

```

|

||||

We save each checkpoint at `task_path/checkpoint/checkpoint_name/`. By specifying the name of checkpoints, the training can be automatically recovered from them.

|

||||

```python

|

||||

import flgo.algorithm.fedavg as fedavg

|

||||

# the two methods need to be extended when using other algorithms

|

||||

class Server(fedavg.Server):

|

||||

def save_checkpoint(self):

|

||||

cpt = {

|

||||

'round': self.current_round, # current communication round

|

||||

'learning_rate': self.learning_rate, # learning rate

|

||||

'model_state_dict': self.model.state_dict(), # model

|

||||

'early_stop_option': { # early stop option

|

||||

'_es_best_score': self.gv.logger._es_best_score,

|

||||

'_es_best_round': self.gv.logger._es_best_round,

|

||||

'_es_patience': self.gv.logger._es_patience,

|

||||

},

|

||||

'output': self.gv.logger.output, # recorded information by Logger

|

||||

'time': self.gv.clock.current_time, # virtual time

|

||||

}

|

||||

return cpt

|

||||

|

||||

def load_checkpoint(self, cpt):

|

||||

md = cpt.get('model_state_dict', None)

|

||||

round = cpt.get('round', None)

|

||||

output = cpt.get('output', None)

|

||||

early_stop_option = cpt.get('early_stop_option', None)

|

||||

time = cpt.get('time', None)

|

||||

learning_rate = cpt.get('learning_rate', None)

|

||||

if md is not None: self.model.load_state_dict(md)

|

||||

if round is not None: self.current_round = round + 1

|

||||

if output is not None: self.gv.logger.output = output

|

||||

if time is not None: self.gv.clock.set_time(time)

|

||||

if learning_rate is not None: self.learning_rate = learning_rate

|

||||

if early_stop_option is not None:

|

||||

self.gv.logger._es_best_score = early_stop_option['_es_best_score']

|

||||

self.gv.logger._es_best_round = early_stop_option['_es_best_round']

|

||||

self.gv.logger._es_patience = early_stop_option['_es_patience']

|

||||

```

|

||||

**Note**: different FL algorithms need to save different types of checkpoints. Here we only provide checkpoint save&load mechanism of FedAvg. We remain two APIs for customization above:

|

||||

|

||||

### Use Logger

|

||||

We show how to use customized Logger [Here](https://flgo-xmu.github.io/Tutorials/1_Configuration/1.6_Logger_Configuration/)

|

||||

## Tutorials and Documents :page_with_curl:

|

||||

We have provided comprehensive [Tutorials](https://flgo-xmu.github.io/Tutorials/) and [Document](https://flgo-xmu.github.io/Docs/FLGo/) for FLGo.

|

||||

## Deployment To Real Machines :computer:

|

||||

Our FLGo is able to be extended to real-world application. We provide a simple [Example](https://github.com/WwZzz/easyFL/tree/FLGo/example/realworld_case) to show how to run FLGo on multiple machines.

|

||||

|

||||

|

||||

|

||||

|

||||

# Overview :notebook:

|

||||

|

||||

### Options

|

||||

|

||||

Basic options:

|

||||

|

||||

* `task` is to choose the task of splited dataset. Options: name of fedtask (e.g. `mnist_classification_client100_dist0_beta0_noise0`).

|

||||

|

||||

* `algorithm` is to choose the FL algorithm. Options: `fedfv`, `fedavg`, `fedprox`, …

|

||||

|

||||

* `model` should be the corresponding model of the dataset. Options: `mlp`, `cnn`, `resnet18.`

|

||||

|

||||

Server-side options:

|

||||

|

||||

* `sample` decides the way to sample clients in each round. Options: `uniform` means uniformly, `md` means choosing with probability.

|

||||

|

||||

* `aggregate` decides the way to aggregate clients' model. Options: `uniform`, `weighted_scale`, `weighted_com`

|

||||

|

||||

* `num_rounds` is the number of communication rounds.

|

||||

|

||||

* `proportion` is the proportion of clients to be selected in each round.

|

||||

|

||||

* `lr_scheduler` is the global learning rate scheduler.

|

||||

|

||||

* `learning_rate_decay` is the decay rate of the learning rate.

|

||||

|

||||

Client-side options:

|

||||

|

||||

* `num_epochs` is the number of local training epochs.

|

||||

|

||||

* `num_steps` is the number of local updating steps and the default value is -1. If this term is set larger than 0, `num_epochs` is not valid.

|

||||

|

||||

* `learning_rate ` is the step size when locally training.

|

||||

|

||||

* `batch_size ` is the size of one batch data during local training. `batch_size = full_batch` if `batch_size==-1` and `batch_size=|Di|*batch_size` if `1>batch_size>0`.

|

||||

|

||||

* `optimizer` is to choose the optimizer. Options: `SGD`, `Adam`.

|

||||

|

||||

* `weight_decay` is to set ratio for weight decay during the local training process.

|

||||

|

||||

* `momentum` is the ratio of the momentum item when the optimizer SGD taking each step.

|

||||

|

||||

Real Machine-Dependent options:

|

||||

|

||||

* `seed ` is the initial random seed.

|

||||

|

||||

* `gpu ` is the id of the GPU device. (e.g. CPU is used without specifying this term. `--gpu 0` will use device GPU 0, and `--gpu 0 1 2 3` will use the specified 4 GPUs when `num_threads`>0.

|

||||

|

||||

* `server_with_cpu ` is set False as default value,..

|

||||

|

||||

* `test_batch_size ` is the batch_size used when evaluating models on validation datasets, which is limited by the free space of the used device.

|

||||

|

||||

* `eval_interval ` controls the interval between every two evaluations.

|

||||

|

||||

* `num_threads` is the number of threads in the clients computing session that aims to accelerate the training process.

|

||||

|

||||

* `num_workers` is the number of workers of the torch.utils.data.Dataloader

|

||||

|

||||

Additional hyper-parameters for particular federated algorithms:

|

||||

|

||||

* `algo_para` is used to receive the algorithm-dependent hyper-parameters from command lines. Usage: 1) The hyper-parameter will be set as the default value defined in Server.__init__() if not specifying this term, 2) For algorithms with one or more parameters, use `--algo_para v1 v2 ...` to specify the values for the parameters. The input order depends on the dict `Server.algo_para` defined in `Server.__init__()`.

|

||||

|

||||

Logger's setting

|

||||

|

||||

* `logger` is used to selected the logger that has the same name with this term.

|

||||

|

||||

* `log_level` shares the same meaning with the LEVEL in the python's native module logging.

|

||||

|

||||

* `log_file` controls whether to store the running-time information into `.log` in `fedtask/taskname/log/`, default value is false.

|

||||

|

||||

* `no_log_console` controls whether to show the running time information on the console, and default value is false.

|

||||

|

||||

### More

|

||||

|

||||

To get more information and full-understanding of FLGo please refer to <a href='https://flgo-xmu.github.io/'>our website</a>.

|

||||

|

||||

In the website, we offer :

|

||||

|

||||

- API docs: Detailed introduction of packages, classes and methods.

|

||||

- Tutorial: Materials that help user to master FLGo.

|

||||

|

||||

## Architecture

|

||||

|

||||

We seperate the FL system into five parts:`algorithm`, `benchmark`, `experiment`, `simulator` and `utils`.

|

||||

```

|

||||

├─ algorithm

|

||||

│ ├─ fedavg.py //fedavg algorithm

|

||||

│ ├─ ...

|

||||

│ ├─ fedasync.py //the base class for asynchronous federated algorithms

|

||||

│ └─ fedbase.py //the base class for federated algorithms

|

||||

├─ benchmark

|

||||

│ ├─ mnist_classification //classification on mnist dataset

|

||||

│ │ ├─ model //the corresponding model

|

||||

│ | └─ core.py //the core supporting for the dataset, and each contains three necessary classes(e.g. TaskGen, TaskReader, TaskCalculator)

|

||||

│ ├─ ...

|

||||

│ ├─ RAW_DATA // storing the downloaded raw dataset

|

||||

│ └─ toolkits //the basic tools for generating federated dataset

|

||||

│ ├─ cv // common federal division on cv

|

||||

│ │ ├─ horizontal // horizontal fedtask

|

||||

│ │ │ └─ image_classification.py // the base class for image classification

|

||||

│ │ └─ ...

|

||||

│ ├─ ...

|

||||

│ ├─ base.py // the base class for all fedtask

|

||||

│ ├─ partition.py // the parttion class for federal division

|

||||

│ └─ visualization.py // visualization after the data set is divided

|

||||

├─ experiment

|

||||

│ ├─ logger //the class that records the experimental process

|

||||

│ │ ├─ basic_logger.py //the base logger class

|

||||

│ | └─ simple_logger.py //a simple logger class

|

||||

│ ├─ analyzer.py //the class for analyzing and printing experimental results

|

||||

│ ├─ res_config.yml //hyperparameter file of analyzer.py

|

||||

│ ├─ run_config.yml //hyperparameter file of runner.py

|

||||

| └─ runner.py //the class for generating experimental commands based on hyperparameter combinations and processor scheduling for all experimental

|

||||

├─ system_simulator //system heterogeneity simulation module

|

||||

│ ├─ base.py //the base class for simulate system heterogeneity

|

||||

│ ├─ default_simulator.py //the default class for simulate system heterogeneity

|

||||

| └─ ...

|

||||

├─ utils

|

||||

│ ├─ fflow.py //option to read, initialize,...

|

||||

│ └─ fmodule.py //model-level operators

|

||||

└─ requirements.txt

|

||||

```

|

||||

|

||||

### Benchmark

|

||||

|

||||

We have added many benchmarks covering several different areas such as CV, NLP, etc

|

||||

|

||||

### Algorithm

|

||||

|

||||

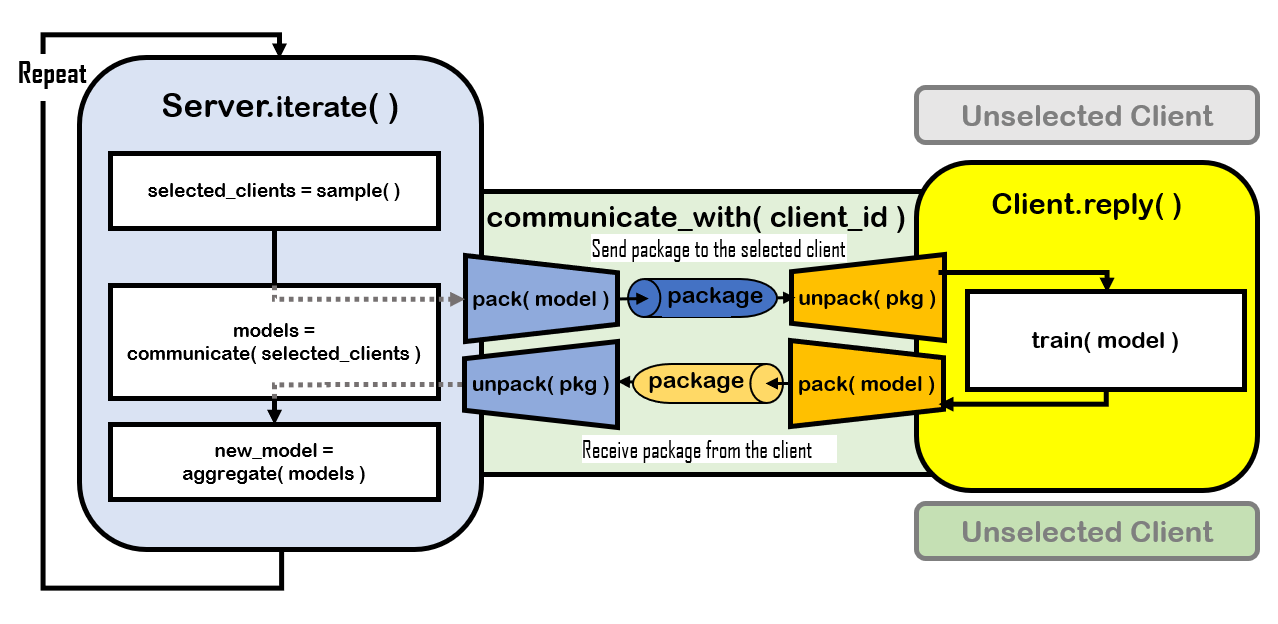

This module is the specific federated learning algorithm implementation. Each method contains two classes: the `Server` and the `Client`.

|

||||

|

||||

|

||||

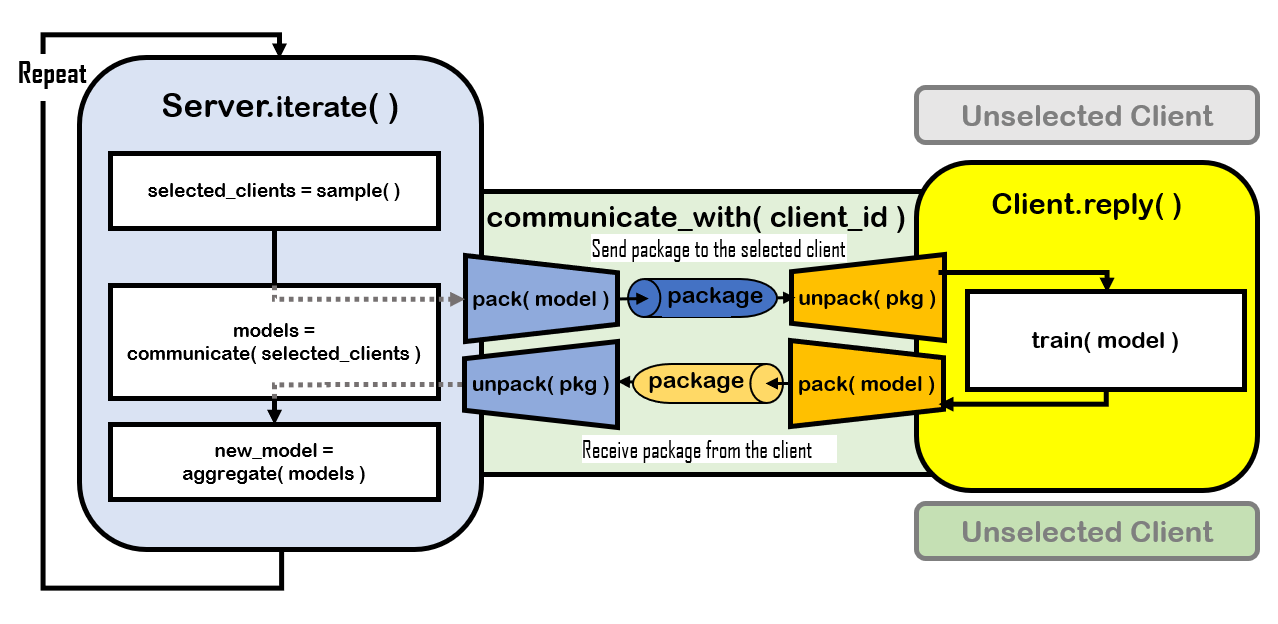

#### Server

|

||||

|

||||

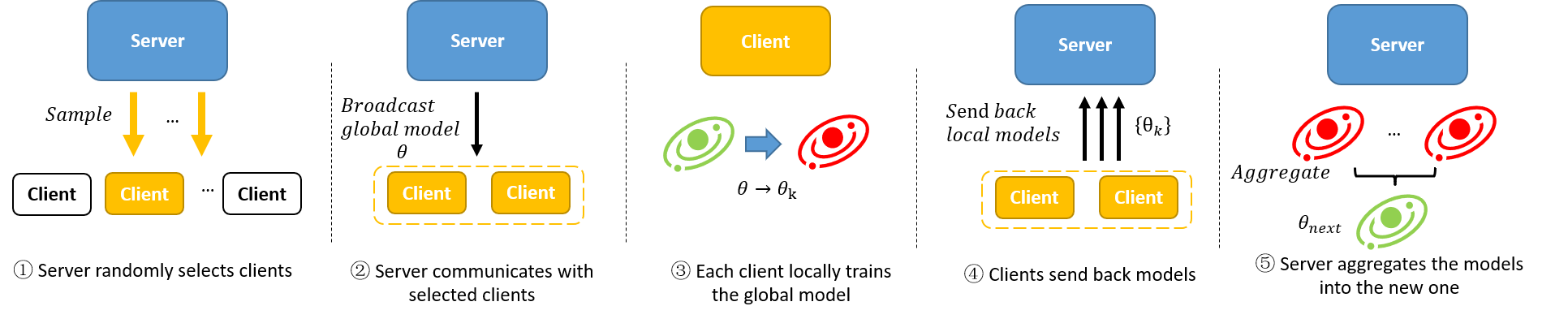

The whole FL system starts with the `main.py`, which runs `server.run()` after initialization. Then the server repeat the method `iterate()` for `num_rounds` times, which simulates the communication process in FL. In the `iterate()`, the `BaseServer` start with sampling clients by `select()`, and then exchanges model parameters with them by `communicate()`, and finally aggregate the different models into a new one with `aggregate()`. Therefore, anyone who wants to customize its own method that specifies some operations on the server-side should rewrite the method `iterate()` and particular methods mentioned above.

|

||||

|

||||

#### Client

|

||||

|

||||

The clients reponse to the server after the server `communicate_with()` them, who first `unpack()` the received package and then train the model with their local dataset by `train()`. After training the model, the clients `pack()` send package (e.g. parameters, loss, gradient,... ) to the server through `reply()`.

|

||||

|

||||

|

||||

### Experiment

|

||||

|

||||

The experiment module contains experiment command generation and scheduling operation, which can help FL researchers more conveniently conduct experiments in the field of federated learning.

|

||||

|

||||

### simulator

|

||||

|

||||

The system_simulator module is used to realize the simulation of heterogeneous systems, and we set multiple states such as network speed and availability to better simulate the system heterogeneity of federated learning parties.

|

||||

|

||||

### Utils

|

||||

|

||||

Utils is composed of commonly used operations:

|

||||

1) model-level operation (we convert model layers and parameters to dictionary type and apply it in the whole FL system).

|

||||

2) API for the FL workflow like gen_benchmark, gen_task, init, ...

|

||||

## Citation

|

||||

|

||||

Please cite our paper in your publications if this code helps your research.

|

||||

|

||||

```

|

||||

@misc{wang2021federated,

|

||||

title={Federated Learning with Fair Averaging},

|

||||

author={Zheng Wang and Xiaoliang Fan and Jianzhong Qi and Chenglu Wen and Cheng Wang and Rongshan Yu},

|

||||

year={2021},

|

||||

eprint={2104.14937},

|

||||

archivePrefix={arXiv},

|

||||

primaryClass={cs.LG}

|

||||

}

|

||||

|

||||

@misc{wang2023flgo,

|

||||

title={FLGo: A Fully Customizable Federated Learning Platform},

|

||||

author={Zheng Wang and Xiaoliang Fan and Zhaopeng Peng and Xueheng Li and Ziqi Yang and Mingkuan Feng and Zhicheng Yang and Xiao Liu and Cheng Wang},

|

||||

year={2023},

|

||||

eprint={2306.12079},

|

||||

archivePrefix={arXiv},

|

||||

primaryClass={cs.LG}

|

||||

}

|

||||

```

|

||||

|

||||

## Contacts

|

||||

Zheng Wang, zwang@stu.xmu.edu.cn

|

||||

|

||||

|

||||

# Buy Me a Coffee :coffee:

|

||||

Buy me a coffee if you'd like to support the development of this repo.

|

||||

<center>

|

||||

<img src="https://github.com/WwZzz/easyFL/assets/20792079/89050169-3927-4eb0-ac32-68d8bee12326" width=180/>

|

||||

</center>

|

||||

|

||||

## References

|

||||

<div id='refer-anchor-1'></div>

|

||||

|

||||

\[McMahan. et al., 2017\] [Brendan McMahan, Eider Moore, Daniel Ramage, Seth Hampson, and Blaise Aguera y Arcas. Communication-Efficient Learning of Deep Networks from Decentralized Data. In International Conference on Artificial Intelligence and Statistics (AISTATS), 2017.](https://arxiv.org/abs/1602.05629)

|

||||

|

||||

<div id='refer-anchor-2'></div>

|

||||

|

||||

\[Cong Xie. et al., 2019\] [Cong Xie, Sanmi Koyejo, Indranil Gupta. Asynchronous Federated Optimization. ](https://arxiv.org/abs/1903.03934)

|

||||

|

||||

<div id='refer-anchor-3'></div>

|

||||

|

||||

\[John Nguyen. et al., 2022\] [John Nguyen, Kshitiz Malik, Hongyuan Zhan, Ashkan Yousefpour, Michael Rabbat, Mani Malek, Dzmitry Huba. Federated Learning with Buffered Asynchronous Aggregation. In International Conference on Artificial Intelligence and Statistics (AISTATS), 2022.](https://arxiv.org/abs/2106.06639)

|

||||

|

||||

<div id='refer-anchor-4'></div>

|

||||

|

||||

\[Zheng Chai. et al., 2020\] [Zheng Chai, Ahsan Ali, Syed Zawad, Stacey Truex, Ali Anwar, Nathalie Baracaldo, Yi Zhou, Heiko Ludwig, Feng Yan, Yue Cheng. TiFL: A Tier-based Federated Learning System.In International Symposium on High-Performance Parallel and Distributed Computing(HPDC), 2020](https://arxiv.org/abs/2106.06639)

|

||||

|

||||

<div id='refer-anchor-5'></div>

|

||||

|

||||

\[Mehryar Mohri. et al., 2019\] [Mehryar Mohri, Gary Sivek, Ananda Theertha Suresh. Agnostic Federated Learning.In International Conference on Machine Learning(ICML), 2019](https://arxiv.org/abs/1902.00146)

|

||||

|

||||

<div id='refer-anchor-6'></div>

|

||||

|

||||

\[Zheng Wang. et al., 2021\] [Zheng Wang, Xiaoliang Fan, Jianzhong Qi, Chenglu Wen, Cheng Wang, Rongshan Yu. Federated Learning with Fair Averaging. In International Joint Conference on Artificial Intelligence, 2021](https://arxiv.org/abs/2104.14937#)

|

||||

|

||||

<div id='refer-anchor-7'></div>

|

||||

|

||||

\[Zeou Hu. et al., 2022\] [Zeou Hu, Kiarash Shaloudegi, Guojun Zhang, Yaoliang Yu. Federated Learning Meets Multi-objective Optimization. In IEEE Transactions on Network Science and Engineering, 2022](https://arxiv.org/abs/2006.11489)

|

||||

|

||||

<div id='refer-anchor-8'></div>

|

||||

|

||||

\[Tian Li. et al., 2020\] [Tian Li, Anit Kumar Sahu, Manzil Zaheer, Maziar Sanjabi, Ameet Talwalkar, Virginia Smith. Federated Optimization in Heterogeneous Networks. In Conference on Machine Learning and Systems, 2020](https://arxiv.org/abs/1812.06127)

|

||||

|

||||

<div id='refer-anchor-9'></div>

|

||||

|

||||

\[Xinran Gu. et al., 2021\] [Xinran Gu, Kaixuan Huang, Jingzhao Zhang, Longbo Huang. Fast Federated Learning in the Presence of Arbitrary Device Unavailability. In Neural Information Processing Systems(NeurIPS), 2021](https://arxiv.org/abs/2106.04159)

|

||||

|

||||

<div id='refer-anchor-10'></div>

|

||||

|

||||

\[Yae Jee Cho. et al., 2020\] [Yae Jee Cho, Jianyu Wang, Gauri Joshi. Client Selection in Federated Learning: Convergence Analysis and Power-of-Choice Selection Strategies. ](https://arxiv.org/abs/2010.01243)

|

||||

|

||||

<div id='refer-anchor-11'></div>

|

||||

|

||||

\[Tian Li. et al., 2020\] [Tian Li, Maziar Sanjabi, Ahmad Beirami, Virginia Smith. Fair Resource Allocation in Federated Learning. In International Conference on Learning Representations, 2020](https://arxiv.org/abs/1905.10497)

|

||||

|

||||

<div id='refer-anchor-12'></div>

|

||||

|

||||

\[Sai Praneeth Karimireddy. et al., 2020\] [Sai Praneeth Karimireddy, Satyen Kale, Mehryar Mohri, Sashank J. Reddi, Sebastian U. Stich, Ananda Theertha Suresh. SCAFFOLD: Stochastic Controlled Averaging for Federated Learning. In International Conference on Machine Learning, 2020](https://arxiv.org/abs/1910.06378)

|

||||

|

||||

|

|

@ -0,0 +1,29 @@

|

|||

#choose a fitfal version of pytorch image according to your cuda and modify the arguement TORCH_VERSION

|

||||

#Reference website:https://hub.docker.com/r/pytorch/pytorch/tags

|

||||

ARG TORCH_VERSION=1.9.0-cuda10.2-cudnn7-runtime

|

||||

FROM pytorch/pytorch:${TORCH_VERSION}

|

||||

|

||||

# update & configure cuda and pip

|

||||

RUN pip install --upgrade pip \

|

||||

& conda update -n base -c https://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud/conda-forge conda \

|

||||

& conda config --add channels https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/free/ \

|

||||

& conda config --add channels https://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud/peterjc123/ \

|

||||

& conda config --add channels https://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud/msys2/ \

|

||||

& conda config --add channels https://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud/conda-forge \

|

||||

& conda config --add channels https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/free/ \

|

||||

& conda config --add channels https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main/ \

|

||||

& conda config --set show_channel_urls yes

|

||||

|

||||

#download requirements

|

||||

RUN pip install cvxopt \

|

||||

& conda install scipy \

|

||||

& pip install matplotlib \

|

||||

& pip install prettytable \

|

||||

& pip install ujson \

|

||||

& pip install pyyaml \

|

||||

& pip install pynvml \

|

||||

& pip install pandas

|

||||

|

||||

|

||||

#install flgo

|

||||

RUN pip install flgo

|

||||

|

|

@ -0,0 +1,21 @@

|

|||

|

||||

# How to use the Dcokerfile

|

||||

|

||||

|

||||

- Step 1

|

||||

|

||||

Modify the Dockerfile accorrding to the comment to build a basic pytorch environment

|

||||

|

||||

- Step 2

|

||||

|

||||

Build the images using the command:

|

||||

> $ docker build -t flgo .

|

||||

|

||||

- Note

|

||||

|

||||

- Step 3

|

||||

|

||||

Create a container using the command:

|

||||

|

||||

> $ docker run -itd --gpus all --network=host flgo /bin/bash

|

||||

|

||||

|

|

@ -0,0 +1 @@

|

|||

::: flgo

|

||||

|

|

@ -0,0 +1 @@

|

|||

:::flgo.algorithm.decentralized

|

||||

|

|

@ -0,0 +1 @@

|

|||

:::flgo.algorithm.fedbase

|

||||

|

|

@ -0,0 +1 @@

|

|||

:::flgo.algorithm.hierarchical

|

||||

|

|

@ -0,0 +1,7 @@

|

|||

::: flgo.algorithm

|

||||

handler: python

|

||||

options:

|

||||

show_root_heading: true

|

||||

heading_level: 1

|

||||

show_source: false

|

||||

|

||||

|

|

@ -0,0 +1 @@

|

|||

:::flgo.algorithm.vflbase

|

||||

|

|

@ -0,0 +1 @@

|

|||

:::flgo.benchmark.base

|

||||

|

|

@ -0,0 +1,6 @@

|

|||

::: flgo.benchmark

|

||||

handler: python

|

||||

options:

|

||||

show_root_heading: true

|

||||

heading_level: 1

|

||||

show_source: false

|

||||

|

|

@ -0,0 +1 @@

|

|||

:::flgo.benchmark.partition

|

||||

|

|

@ -0,0 +1 @@

|

|||

:::flgo.benchmark.toolkits.cv.classification

|

||||

|

|

@ -0,0 +1 @@

|

|||

:::flgo.benchmark.toolkits.cv.detection

|

||||

|

|

@ -0,0 +1 @@

|

|||

:::flgo.benchmark.toolkits.cv.segmentation

|

||||

|

|

@ -0,0 +1 @@

|

|||

:::flgo.benchmark.toolkits.graph.graph_classification

|

||||

|

|

@ -0,0 +1 @@

|

|||

:::flgo.benchmark.toolkits.graph.link_prediction

|

||||

|

|

@ -0,0 +1 @@

|

|||

:::flgo.benchmark.toolkits.graph.node_classification

|

||||

|

|

@ -0,0 +1 @@

|

|||

:::flgo.benchmark.toolkits.nlp.classification

|

||||

|

|

@ -0,0 +1 @@

|

|||

:::flgo.benchmark.toolkits.nlp.language_modeling

|

||||

|

|

@ -0,0 +1 @@

|

|||

:::flgo.benchmark.toolkits.nlp.translation

|

||||

|

|

@ -0,0 +1 @@

|

|||

:::flgo.benchmark.toolkits.partition

|

||||

|

|

@ -0,0 +1 @@

|

|||

:::flgo.benchmark.toolkits.visualization

|

||||

|

|

@ -0,0 +1,35 @@

|

|||

::: flgo.algorithm

|

||||

handler: python

|

||||

options:

|

||||

show_root_heading: true

|

||||

heading_level: 1

|

||||

show_source: false

|

||||

|

||||

::: flgo.benchmark

|

||||

handler: python

|

||||

options:

|

||||

show_root_heading: true

|

||||

heading_level: 1

|

||||

show_source: false

|

||||

|

||||

::: flgo.experiment

|

||||

handler: python

|

||||

options:

|

||||

show_root_heading: true

|

||||

heading_level: 1

|

||||

show_source: false

|

||||

|

||||

::: flgo.simulator

|

||||

handler: python

|

||||

options:

|

||||

show_root_heading: true

|

||||

heading_level: 1

|

||||

show_source: false

|

||||

|

||||

::: flgo.utils

|

||||

handler: python

|

||||

options:

|

||||

show_root_heading: true

|

||||

heading_level: 1

|

||||

show_source: false

|

||||

|

||||

|

|

@ -0,0 +1 @@

|

|||

To be implemented...

|

||||

|

|

@ -0,0 +1 @@

|

|||

:::flgo.experiment.analyzer

|

||||

|

|

@ -0,0 +1 @@

|

|||

:::flgo.experiment.device_scheduler

|

||||

|

|

@ -0,0 +1,6 @@

|

|||

::: flgo.experiment

|

||||

handler: python

|

||||

options:

|

||||

show_root_heading: true

|

||||

heading_level: 1

|

||||

show_source: false

|

||||

|

|

@ -0,0 +1 @@

|

|||

:::flgo.experiment.logger.BasicLogger

|

||||

|

|

@ -0,0 +1 @@

|

|||

:::flgo.experiment.logger.BasicLogger

|

||||

|

|

@ -0,0 +1 @@

|

|||

:::flgo.simulator.base

|

||||

|

|

@ -0,0 +1 @@

|

|||

:::flgo.simulator.default_simulator

|

||||

|

|

@ -0,0 +1,6 @@

|

|||

::: flgo.simulator

|

||||

handler: python

|

||||

options:

|

||||

show_root_heading: true

|

||||

heading_level: 1

|

||||

show_source: false

|

||||

|

|

@ -0,0 +1 @@

|

|||

:::flgo.utils.fflow

|

||||

|

|

@ -0,0 +1 @@

|

|||

:::flgo.utils.fmodule

|

||||

|

|

@ -0,0 +1,19 @@

|

|||

# Algorithm

|

||||

In FLGo, each algorithm is described by an independent file consisting of the objects

|

||||

(i.e. server and clients in horizontal FL) with their actions.

|

||||

## Horizontal FL

|

||||

|

||||

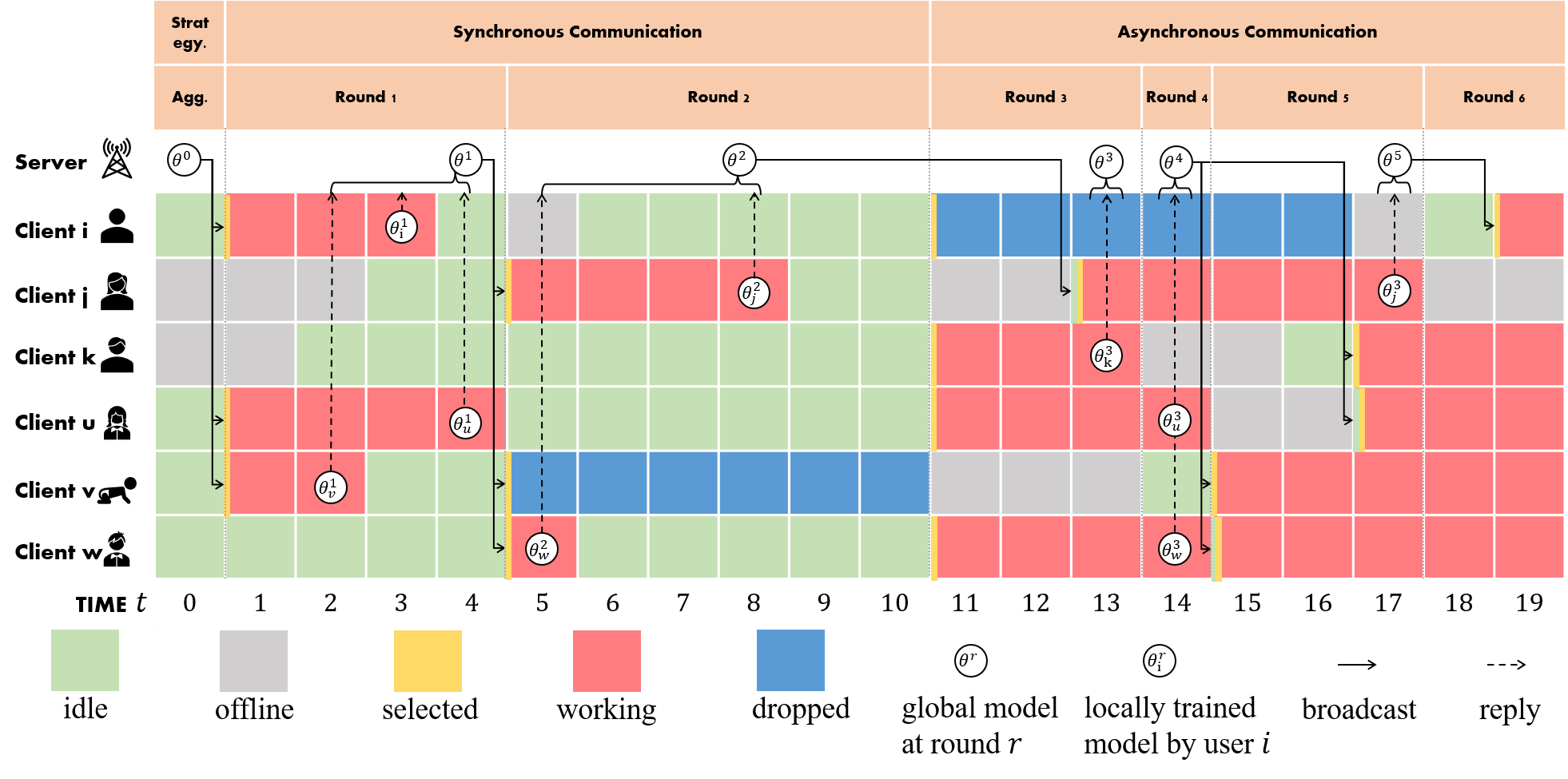

A classical procedure of FL training process is as shown in the figure above, where the server iteratively

|

||||

broadcasts the global model to a subset of clients and aggregates the received locally

|

||||

trained models from them. Following this scheme, a great number of FL algorithms can be

|

||||

easily implemented by FLGo. For example, to implement methods that customize the local

|

||||

training process (e.g. FedProx, MOON), developers only need to modify the function

|

||||

`client.train(...)`. And a series of sampling strategies can be realized by only replacing

|

||||

the function `server.sample() `. We also provide comprehensive tutorial for using FLGo

|

||||

to implement the state of the art algorithms. In addition, asynchronous algorithms can

|

||||

share the same scheme with synchronous algorithms in FLGo, where developers only need to

|

||||

concern about the sampling strategy and how to deal with the currently received packages

|

||||

from clients at each moment.

|

||||

|

||||

## Vertical FL

|

||||

To be completed.

|

||||

|

|

@ -0,0 +1,18 @@

|

|||

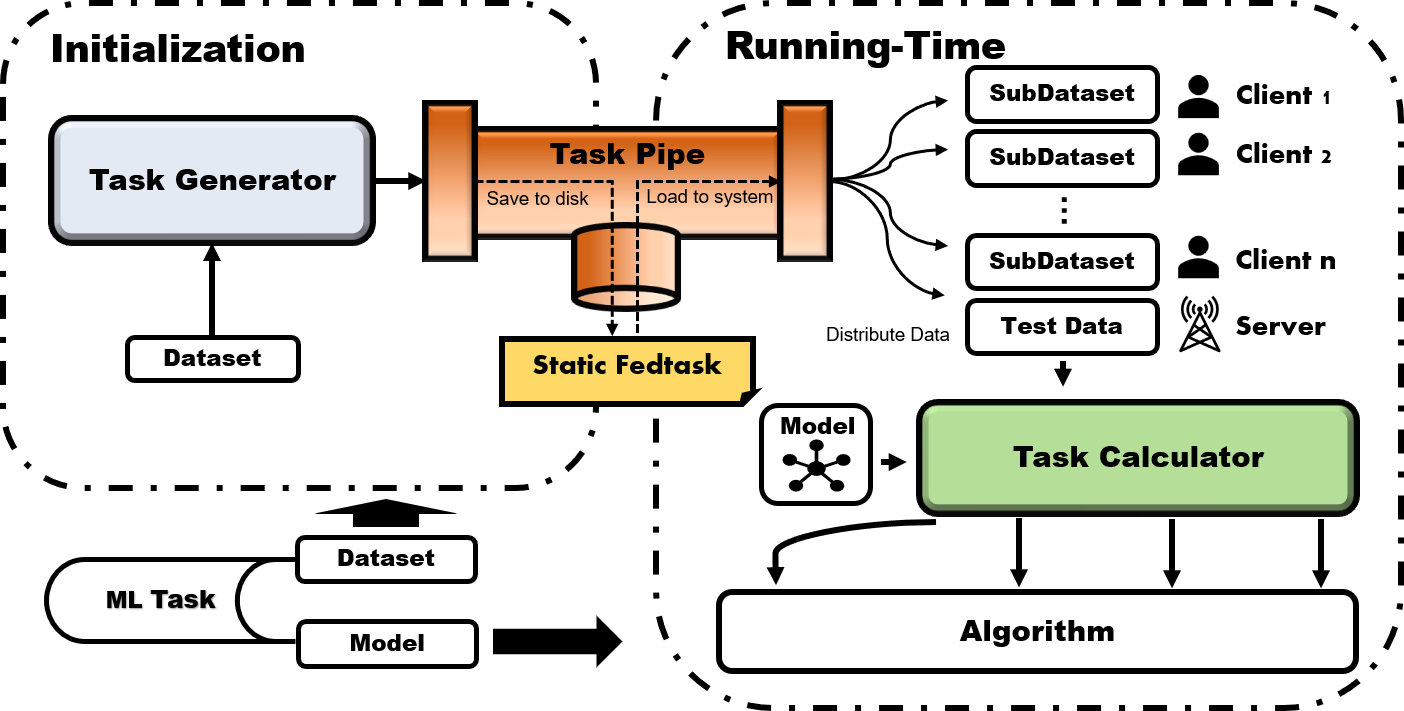

# Benchmark

|

||||

|

||||

|

||||

At the initialization phase, the original dataset is input to `TaskGenerator` that

|

||||

accordingly and flexibly partitions the dataset into local sub-datasets owned by

|

||||

clients and a testing dataset owned the server. And the local data is further divided

|

||||

to training part and validation part for hyper-parameter tuning purpose. Then, all of

|

||||

the division information on the original dataset will be stored by `TaskPipe` into

|

||||

the disk as a static `fedtask`, where different federated algorithms can fairly

|

||||

compare with each other on the same fedtask with a particular model.

|

||||

|

||||

During the running-time phase, `TaskPipe` first distributes the partitioned datasets

|

||||

to clients and the server after loading the saved partition information and the original

|

||||

dataset into memory. After the model training starts, Algorithm module can either use the

|

||||

presetting `TaskCalculator` APIs to complement the task-specific calculations (i.e. loss

|

||||

computation, transferring data across devices, evaluation, batching data) or optimize in

|

||||

customized way. In this manner, the task-relevant details will be blinded to the algorithm

|

||||

for most cases, which significantly eases the development of new algorithms.

|

||||

|

|

@ -0,0 +1,14 @@

|

|||

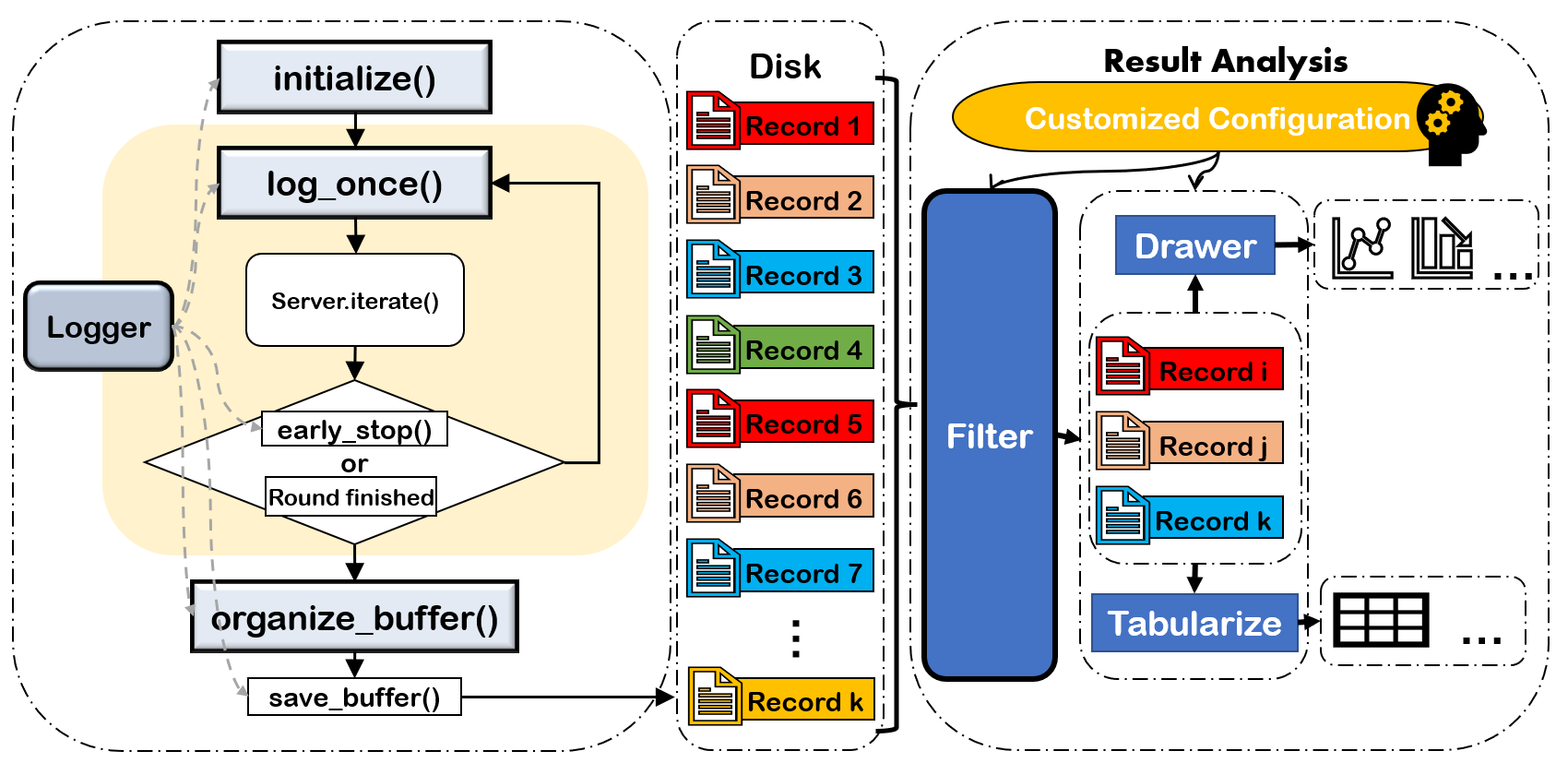

# Logger and analyzer

|

||||

|

||||

Although there are already several comprehensive experiment managers (e.g. wandb,

|

||||

tensorboard), our `Experiment` module is compatible with them and enable

|

||||

customizing experiments in a non-intrusive way to the codes, where users can create a

|

||||

`logger` by modifying some APIs to track variables of interest and specify the customized

|

||||

`logger` in optional parameters.

|

||||

|

||||

After the `logger` stores the running-time information into records, the `analyzer` can read

|

||||

them from the disk. A filter is designed to enable only selecting records of interest, and

|

||||

several APIs are provided for quickly visualizing and analyzing the results by few codes.

|

||||

|

||||

# Device Scheduler

|

||||

To be complete.

|

||||

|

|

@ -0,0 +1,60 @@

|

|||

# FLGo Framework

|

||||

|

||||

|

||||

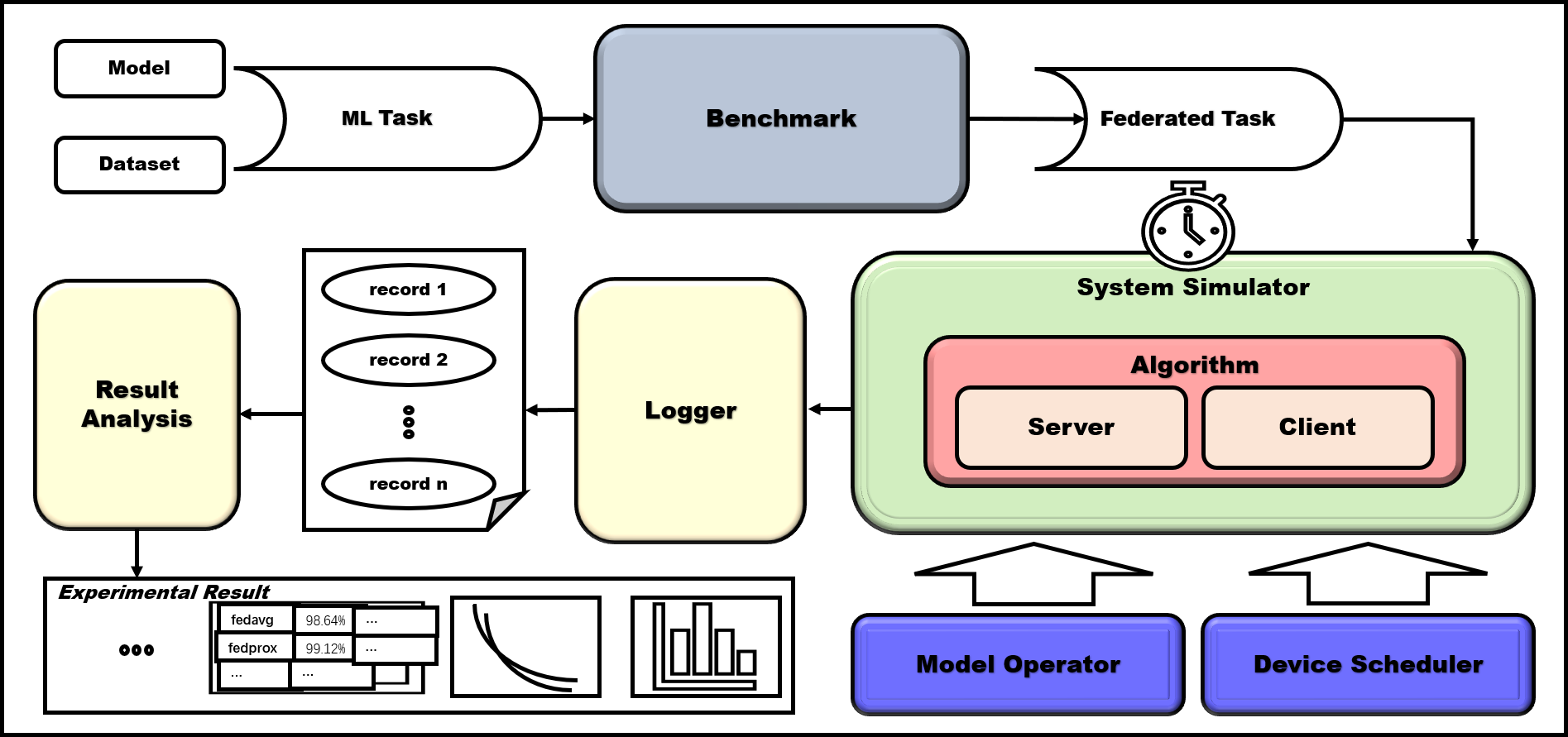

The whole workflow of FLGo is as shown in the above picture. FLGo framework mainly runs

|

||||

by three steps.

|

||||

|

||||

Firstly, given a ML task (i.e. dataset and model), FLGo converts it into a static federated

|

||||

task through partitioning the original ML dataset into subsets of data owned by different

|

||||

clients, and hide the task-specific details to the algorithms.

|

||||

|

||||

Secondly, different federated algorithms can run on the fed static federated task to train

|

||||

a particular model (e.g. CNN, MLP) . During training phase, the system simulator will create

|

||||

a simulated environment where a virtual global clock can fairly measure the time and arbitrary

|

||||

client behaviors can be modeled, which is also transparent to the implementation of algorithms.

|

||||

|

||||

Finally, the experimental tracker in FLGo is responsible for tracing the running-time information

|

||||

and organizing the results into tables or figures.

|

||||

|

||||

The organization of all the modules is as below

|

||||

|

||||

```

|

||||

├─ algorithm

|

||||

│ ├─ fedavg.py //fedavg algorithm

|

||||

│ ├─ ...

|

||||

│ ├─ fedasync.py //the base class for asynchronous federated algorithms

|

||||

│ └─ fedbase.py //the base class for federated algorithms

|

||||

|

|

||||

├─ benchmark

|

||||

│ ├─ mnist_classification //classification on mnist dataset

|

||||

│ │ ├─ model //the corresponding model

|

||||

│ | └─ core.py //the core supporting for the dataset, and each contains three necessary classes(e.g. TaskGen, TaskReader, TaskCalculator)

|

||||

│ ├─ base.py // the base class for all fedtask

|

||||

│ ├─ ...

|

||||

│ ├─ RAW_DATA // storing the downloaded raw dataset

|

||||

│ └─ toolkits //the basic tools for generating federated dataset

|

||||

│ ├─ cv // common federal division on cv

|

||||

│ │ ├─ horizontal // horizontal fedtask

|

||||

│ │ │ └─ image_classification.py // the base class for image classification

|

||||

│ │ └─ ...

|

||||

│ ├─ ...

|

||||

│ ├─ partition.py // the parttion class for federal division

|

||||

│ └─ visualization.py // visualization after the data set is divided

|

||||

|

|

||||

├─ experiment

|

||||

│ ├─ logger //the class that records the experimental process

|

||||

│ │ ├─ ...

|

||||

│ | └─ simple_logger.py //a simple logger class

|

||||

│ ├─ analyzer.py //the class for analyzing and printing experimental results

|

||||

| └─ device_scheduler.py // automatically schedule GPUs to run in parallel

|

||||

|

|

||||

├─ simulator //system heterogeneity simulation module

|

||||